Gabo Esquivel - Backend Development Experience

My journey into backend development began with a fascination for how systems communicate behind the scenes. While many developers were focused on creating visually appealing interfaces, I found myself drawn to the architecture that powers these experiences. I still remember the first time I built a real-time data pipeline that seamlessly connected disparate systems—watching data flow through the infrastructure I'd created felt like solving an intricate puzzle. Throughout my career, I've approached backend engineering with a focus on building reliable foundations that enable innovation at the application layer, believing that thoughtful system design creates both technical stability and business agility.

Early Node.js & Community Building

I was an early adopter of Node.js in Latin America, picking it up around 2010-2011, when server-side JavaScript was just emerging as a viable backend technology. What excited me about Node wasn't just the technical aspects, but its potential to unify frontend and backend development under a single language. I founded Costa Rica JS to build a regional community around this technology and promote server-side JavaScript throughout Central America. Through NodeSchool workshops and regular meetups, I helped local developers bridge the gap between client and server development, creating a thriving ecosystem that continues today.

In 2014, I applied this expertise to build 4Tius Fitness Data Tracker, implementing a fitness analytics platform with AWS, MongoDB, and Node.js. This project presented unique challenges in data synchronization and real-time processing—I remember the satisfaction of seeing activity data stream from wearable devices into our system and transform into actionable insights almost instantly. The system processed wearable device data and served analytics via RESTful APIs, leveraging Node's event-driven architecture for real-time data handling in ways that would have been much more complex with traditional backend technologies.

Financial Systems & Digital Banking

In 2015, I joined Wink, Costa Rica's first neo-bank, where I faced the significant challenge of developing backend services that had to integrate with traditional banking systems while delivering a modern digital experience. The technical complexity was matched only by the regulatory requirements—I recall spending weeks ensuring our transaction processing met both security standards and compliance rules while still providing a seamless user experience. I implemented secure biometric authentication and transaction processing systems, laying the foundation for my later work in financial applications. This experience taught me the importance of building resilient systems that could handle sensitive financial data with both security and performance in mind—a balance that would become increasingly important in my later work with blockchain technologies.

Entering Blockchain Infrastructure

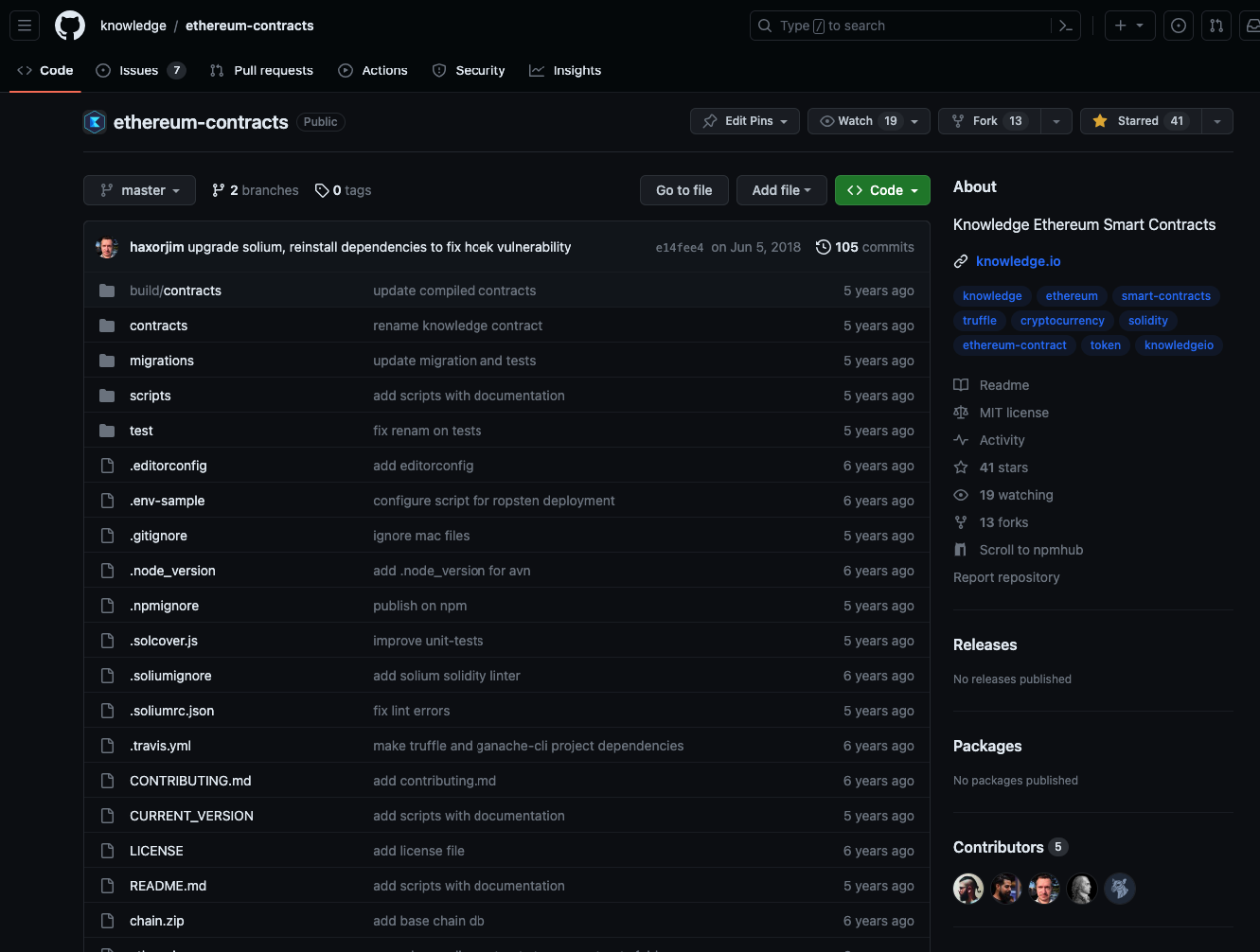

Building on my experience with traditional financial systems, I ventured into blockchain development in 2017 at Knowledge.io, where I built backend services for their token rewards dashboard, implementing systems to manage expertise metrics and decentralized identity. What fascinated me most during this period was the paradigm shift from centralized to distributed systems—rethinking authentication, data persistence, and transaction processing in entirely new ways.

From 2018 to 2020 at EOS Costa Rica, I worked extensively with blockchain infrastructure, playing a key role in the EOS mainnet launch and managing validators for multiple networks. I still remember the precise moment when blocks began producing on the mainnet—after months of preparation and testing, seeing the network come alive was exhilarating. A highlight of this period was building a custom private blockchain for Grant Thornton to handle intercompany transactions and tax management using stablecoins, with immutable audit trails. This enterprise implementation demonstrated how blockchain technology could solve real business problems with enhanced transparency and efficiency—bridging the gap between emerging tech and traditional institutional needs.

Data Infrastructure & GraphQL

For EOS Rate, I developed backend services to support the community-driven Block Producer rating tool, implementing data aggregation and secure voting mechanisms that became widely adopted in the EOS ecosystem. The challenge of creating a system that was both transparent enough for community trust and secure enough to prevent manipulation taught me valuable lessons about designing public-facing systems.

In 2020, I created ChainGraph, a specialized real-time GraphQL toolkit for blockchain applications. This project emerged from my frustration with existing tools for blockchain data access—I wanted to create something that made complex on-chain data accessible through modern API patterns. The moment when I first queried complex blockchain data with a simple GraphQL request and watched real-time updates stream into a test application felt like breaking through a barrier that had been limiting developer productivity. ChainGraph featured sophisticated blockchain data indexing and event streaming capabilities, showcasing how proper data modeling could transform complex blockchain interactions into developer-friendly experiences. Beyond the technical achievements, this project reinforced my belief that reducing friction for developers is essential for broader blockchain adoption.

Specialized Data Indexing

Building on my blockchain data experience, I developed specialized data indexing solutions for various projects. At RareMint (2021), I built an EVM NFT data indexer using Moralis streams to support their NFT marketplace for digitized sports collectibles. The technical challenge of efficiently indexing NFT metadata and transaction history while maintaining real-time updates pushed me to develop new approaches to blockchain data processing.

At BitLauncher (2022-2023), I built a custom indexer for EOS EVM using Node.js with Viem EVM event subscriptions, enabling real-time transaction monitoring and analytics. The complexity of processing cross-chain transactions required creative solutions for data consistency and synchronization between different blockchain networks. For Opyn (2023), I set up a Ponder indexing service with PostgreSQL for chain data, creating efficient data structures for options protocol information. This work with financial derivatives data required particularly careful attention to data accuracy and performance, as options pricing and positions needed to update in near real-time for traders.
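The data consistency concern mentioned for the event-based indexer can be illustrated with a small sketch. This is not the BitLauncher implementation: the `ChainEvent` shape, the `InMemoryIndexer` class, and its method names are hypothetical, and an in-memory object stands in for a real database. It assumes events arrive from a log subscription (for example viem's `watchContractEvent`), may be delivered more than once, and may need to be rolled back after a chain reorganization.

```typescript
// Hypothetical shape of a decoded EVM log; the field names are illustrative.
interface ChainEvent {
  blockNumber: number;
  txHash: string;
  logIndex: number; // position of the log within its block
  address: string;
  amount: number; // simplified; real token amounts would need bigint
}

// Minimal in-memory stand-in for an indexer's storage layer (an assumption).
class InMemoryIndexer {
  // Events keyed by "txHash:logIndex" so a replayed event is stored only once.
  private events: Record<string, ChainEvent> = {};

  // Returns true when the event was new, false when it was a duplicate.
  ingest(event: ChainEvent): boolean {
    const key = event.txHash + ":" + event.logIndex;
    if (Object.prototype.hasOwnProperty.call(this.events, key)) return false;
    this.events[key] = event;
    return true;
  }

  // Removes every event at or above the given block, e.g. after a reorg.
  // Returns the number of events removed.
  rollbackFrom(blockNumber: number): number {
    let removed = 0;
    for (const key of Object.keys(this.events)) {
      if (this.events[key].blockNumber >= blockNumber) {
        delete this.events[key];
        removed++;
      }
    }
    return removed;
  }

  // Sums the indexed amounts for one contract address.
  totalFor(address: string): number {
    let total = 0;
    for (const key of Object.keys(this.events)) {
      if (this.events[key].address === address) total += this.events[key].amount;
    }
    return total;
  }
}

// Usage with made-up values: the second ingest of the same log is ignored.
const indexer = new InMemoryIndexer();
const sample: ChainEvent = {
  blockNumber: 10, txHash: "0xaaa", logIndex: 0, address: "0xpool", amount: 5,
};
indexer.ingest(sample);
indexer.ingest(sample);
console.log(indexer.totalFor("0xpool"));
```

Keying on the transaction hash and log index makes ingestion idempotent, so a reconnecting subscription can safely replay recent blocks. A production indexer would typically apply the same idea with a unique constraint in a database rather than an in-memory object.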

Cloud Architecture & DevOps

Throughout my career, I've implemented modern cloud architecture principles across my backend systems. At Knowledge.io (2017), I built scalable serverless infrastructure using AWS to support their ICO platform. I remember the challenge of designing systems that could handle unpredictable traffic spikes during token sales—creating architectures that would automatically scale without manual intervention. For BitcashBank (2020-2021), I designed cloud infrastructure focused on handling financial transactions with high availability and security requirements, implementing redundancy patterns that ensured system reliability even during partial outages.

My experience spans AWS, GCP, and various serverless technologies, allowing me to build scalable, cost-efficient solutions tailored to specific project needs. As a full-stack developer, I connect these backend systems with modern interfaces, implementing CI/CD pipelines, container orchestration, and infrastructure-as-code practices to ensure reliable deployments and operations. This integrated approach has been particularly valuable when building systems that need to evolve rapidly while maintaining stability, because it creates a foundation that supports innovation without sacrificing reliability.

AI & Vector Search Systems

Since 2023, I've focused increasingly on integrating AI capabilities into backend systems. At Masterbots (2023-2024), I developed specialized AI agents and chatbot interfaces, implementing backends that efficiently process complex user queries while managing large knowledge bases. The challenge of creating systems that could understand natural language while delivering precise information pushed me to explore advanced retrieval techniques and context management approaches.

For BitLauncher (2022-2024), I implemented a chatbot assistant for document retrieval using the Vercel AI SDK, enhancing user interaction and information access. Most recently, for LegalAgent's AI assistant (2024-2025), I built a RAG architecture that handles sensitive legal documents while maintaining strict privacy requirements. This project was particularly meaningful because it required balancing powerful AI capabilities with stringent privacy controls: sensitive legal information had to remain protected while still being accessible through natural language queries. These projects showcase my ability to build retrieval-augmented generation systems on vector stores such as Supabase Postgres with the pgvector extension, enabling context-aware AI interactions that augment human capabilities rather than simply automating tasks.
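The retrieval step of a RAG system like the ones described above can be sketched in a few lines. This is a minimal illustration, not the LegalAgent or BitLauncher code: the `Chunk` type and the `cosineSimilarity`, `topK`, and `buildPrompt` helpers are hypothetical, embeddings are tiny hand-written vectors rather than model output, and an in-memory array stands in for a pgvector table (where the equivalent ranking would usually be done in SQL with the cosine distance operator `<=>`).

```typescript
// Hypothetical document chunk with a precomputed embedding.
interface Chunk {
  id: string;
  text: string;
  embedding: number[];
}

// Cosine similarity between two vectors of equal length.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Embedding dimensions differ");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the k chunks most similar to the query embedding, best first.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.chunk);
}

// Assembles a prompt that restricts the model to the retrieved sources.
function buildPrompt(question: string, context: Chunk[]): string {
  const sources = context.map((c, i) => "[" + (i + 1) + "] " + c.text).join("\n");
  return "Answer using only the sources below.\n\n" + sources + "\n\nQuestion: " + question;
}

// Usage with made-up data: two-dimensional vectors keep the example readable.
const corpus: Chunk[] = [
  { id: "a", text: "Contracts require mutual consent.", embedding: [1, 0] },
  { id: "b", text: "Office hours are 9 to 5.", embedding: [0, 1] },
  { id: "c", text: "Consent must be informed.", embedding: [0.9, 0.1] },
];
const retrieved = topK([1, 0], corpus, 2);
console.log(buildPrompt("What makes a contract valid?", retrieved));
```

Keeping retrieval separate from prompt assembly means access controls can be applied to the candidate chunks before any text reaches the model, which is one way to approach the privacy requirement; whether the original systems did this is not stated in the text.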

Let's Work Together

I typically work through remote 1099 contracts via my US-based company, Blockmatic Labs LLC. This setup gives clients straightforward contracts, built-in compliance, and IP protection. Based in Costa Rica, I operate on US Mountain Time and am just a short flight from major US cities.

If you’re exploring something ambitious—a decentralized system, an AI-powered product, or an idea that needs a strong technical foundation—I’d be happy to hear from you.

Latest Backend Articles

Intent-Based Multichain DeFi (October 15, 2025)

Prompt Injection (June 15, 2025)

AI Model Context Protocol (MCP) (June 8, 2025)

RAG with TypeScript (June 2, 2025)

Scaling and Securing WebSocket Connections (May 16, 2025)

EVM Token Standards Overview (May 15, 2025)

Managing Risks in DeFi (May 14, 2025)

AMMs, Liquidity Pools & Yield Farming (May 12, 2025)